CS 6620.001 "Ray Tracing for Graphics" Fall 2014

Welcome to my ray tracing site for the course CS 6620.001, being taught at the University of Utah in Fall 2014 by Cem Yuksel.

Welcome, fellow students! I have lots of experience tracing rays, and with graphics in general, so I'll be pleased to help by giving constructive tips throughout. I'll also try very hard to get the correct image, or to explain why particular images are correct as opposed to others. If you shoot me an email with a link to your project, I'm pretty good at guessing what the issues in a raytracer are from looking at wrong images.

For hardware specifications, see the bottom of the page.

Timing information looks like "(#t #s ##:##:##)": the number of threads used, the number of samples (per pixel, per light, or as explained in context), and the render time rounded to the nearest second.
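As a rough illustration of that format, here is a minimal sketch of a formatter. The function name `format_timing` is hypothetical (it is not taken from the raytracer), and reading the last field as hours:minutes:seconds is an assumption, although it is consistent with the timings quoted further down the page.

```python
def format_timing(threads: int, samples: int, seconds: float) -> str:
    """Format a render timing as '(#t #s ##:##:##)'.

    Assumes the last field is hh:mm:ss; the elapsed time is rounded
    half-up to the nearest whole second.
    """
    total = int(seconds + 0.5)            # nearest second (non-negative input)
    hours, rem = divmod(total, 3600)      # whole hours, leftover seconds
    minutes, secs = divmod(rem, 60)       # whole minutes, leftover seconds
    return f"({threads}t {samples}s {hours:02d}:{minutes:02d}:{secs:02d})"


if __name__ == "__main__":
    # A 16-thread, 10-sample render that took about 79.6 seconds.
    print(format_timing(16, 10, 79.6))
```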

Project 5 - "Triangular Meshes"

To properly implement bidirectional path tracing (BDPT), which was one of my stated goals prior to taking this class, I need to be able to sample points on the image plane. Currently, points on the image plane, and the optical geometry of the camera generally, are privileged. To do this right, the camera (all of the camera, not just the lens) should be part of the scene. It's okay if the rest of it doesn't interact, but, for example, all the lenses should be modeled with first-class geometry. This will also have nice effects (e.g. physically based lens flares!).

The reason this is important is a sampling issue. While I could implement BDPT with the "eye" point being at the lens, this is stupid since the BTDF of the lens is a delta function: any light path point you try to connect to the eye point will have a contribution of zero, because the connection direction essentially never matches the single direction the lens refracts into. This makes it useless for better sampling of, for example, directly viewed caustics on a diffuse surface, which by the way is a major attraction of implementing the algorithm in the first place.
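The delta-function problem can be seen in a toy experiment. The sketch below is not code from the raytracer; every name in it (`refract`, `random_direction_below`, `count_valid_connections`) is hypothetical, and it assumes a flat, perfectly specular interface with an index of refraction of 1.5. It refracts one incoming direction through the interface, then tries many random "connection" directions and counts how many coincide with the refracted one.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)


def refract(d, n, eta):
    """Refract unit direction d at a surface with unit normal n.

    n must face against d (dot(d, n) < 0); eta is n_incident / n_transmitted.
    Returns None on total internal reflection.
    """
    cos_i = -dot(d, n)
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * di + (eta * cos_i - cos_t) * ni for di, ni in zip(d, n))


def random_direction_below(rng):
    """Uniformly distributed unit vector in the hemisphere z <= 0."""
    z = -rng.random()
    phi = 2.0 * math.pi * rng.random()
    r = math.sqrt(1.0 - z * z)
    return (r * math.cos(phi), r * math.sin(phi), z)


def count_valid_connections(trials, seed=0):
    """Count random connection directions that match the refracted direction."""
    rng = random.Random(seed)
    normal = (0.0, 0.0, 1.0)
    incoming = normalize((0.3, 0.0, -1.0))          # heading down into the glass
    transmitted = refract(incoming, normal, 1.0 / 1.5)
    hits = 0
    for _ in range(trials):
        # A delta BTDF is non-zero only for exactly this one direction.
        if random_direction_below(rng) == transmitted:
            hits += 1
    return hits


if __name__ == "__main__":
    print(count_valid_connections(100_000))
```

A direction picked by connecting two independently chosen points has probability zero of landing on the one refracted direction, which is why renderers typically skip explicit connections at perfectly specular vertices and only handle them by sampling the refracted direction directly.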

To do all that, I should implement the lenses as CSG objects, which my raytracer doesn't support. Yet. After implementing CSG, I'll try to implement BDPT.
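For a sense of what a CSG lens involves, here is a minimal sketch, assuming a biconvex lens modeled as the intersection of two spheres. It is an illustration only, not the raytracer's design, and all names (`ray_sphere_interval`, `intersect_intervals`, `ray_lens_interval`) are hypothetical. Each sphere yields the interval of ray parameters for which the ray is inside it, and the CSG intersection is just the overlap of those intervals.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def ray_sphere_interval(origin, direction, center, radius):
    """Return (t_enter, t_exit) where the ray is inside the sphere, or None.

    direction must be unit length. The interval is not clipped to t >= 0,
    so it may start behind the ray origin.
    """
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None                      # the ray misses the sphere
    root = math.sqrt(disc)
    return (-b - root, -b + root)


def intersect_intervals(a, b):
    """CSG intersection of two parametric intervals (None means empty)."""
    if a is None or b is None:
        return None
    lo, hi = max(a[0], b[0]), min(a[1], b[1])
    return (lo, hi) if lo < hi else None


def ray_lens_interval(origin, direction, sphere_a, sphere_b):
    """Interval where the ray is inside both spheres, i.e. inside the lens."""
    return intersect_intervals(
        ray_sphere_interval(origin, direction, *sphere_a),
        ray_sphere_interval(origin, direction, *sphere_b),
    )


if __name__ == "__main__":
    # Two radius-2 spheres whose overlap is a lens 1 unit thick, centered at the origin.
    lens = (((0.0, 0.0, -1.5), 2.0), ((0.0, 0.0, 1.5), 2.0))
    # A ray travelling along the optical axis.
    print(ray_lens_interval((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), *lens))
```

For the on-axis ray in the example, the result is the interval (4.5, 5.5): the ray enters the lens at z = -0.5 and leaves at z = 0.5. A full CSG implementation would also need surface normals at the interval endpoints plus union and difference operators, none of which are shown here.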

The point is, all this will take time. Since I've already had triangle-mesh support and a good acceleration data structure for a while now, I effectively have two weeks to implement it all. (I also want to leave some time to get ahead, since the project after that is texturing, which my tracer currently has no support for whatsoever.) These changes will probably break everything for a little bit, so I want to get these updates out of the way. If I can produce snazzy images of the changes I'm making, I might edit in updates as I go. Until then, you might check out the previous project for pretty pictures.

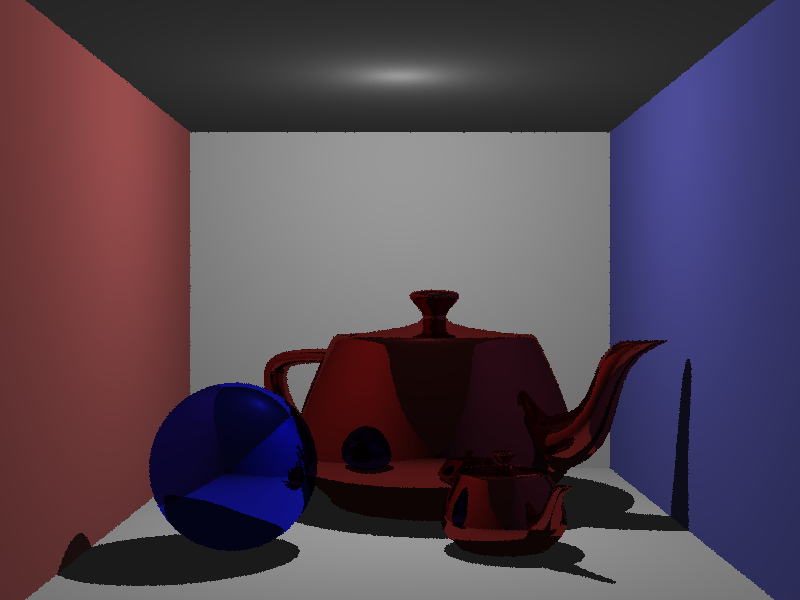

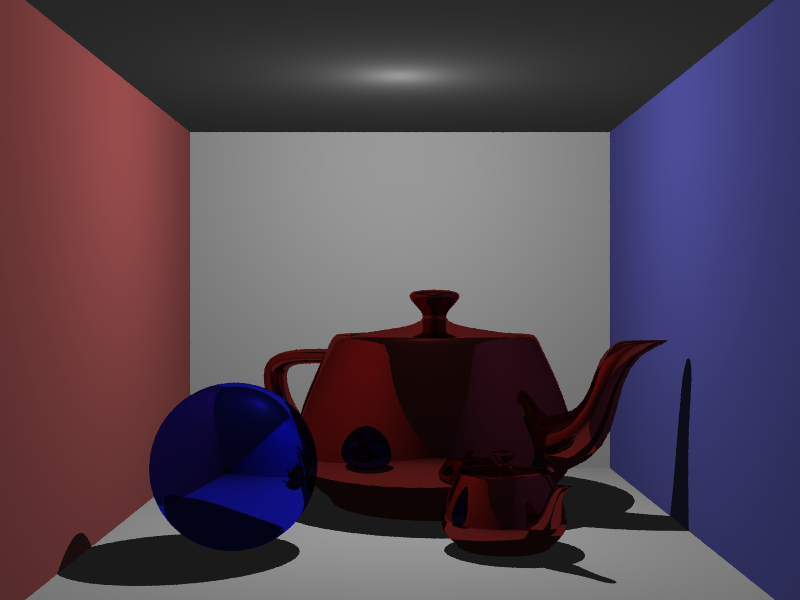

Here's the given scene. Again, the refraction and reflection are not using broken BRDFs. Not too broken, anyway. Images are (16t 1s 00:00:08) and (16t 10s 00:01:20), respectively.


Hardware

Except as mentioned, renders are done on my laptop, which has:

- Intel i7-990X (12 MB cache, 3.46 GHz base, 3.73 GHz turbo, 6 cores, 12 threads)

- 12 GB RAM (DDR3 1333MHz Triple Channel)

- NVIDIA GeForce GTX 580M

- 750GB HDD (7200RPM, Serial-ATA II 300, 16MB Cache)

- Windows 7 x86-64 Professional (although all code compiles/runs on Linux)