CS 6620.001 "Ray Tracing for Graphics" Fall 2014

Welcome to my ray tracing site for the course CS 6620.001, being taught at the University of Utah in Fall 2014 by Cem Yuksel.

Welcome fellow students! I have lots of experience tracing rays, and with graphics in general, and so I'll be pleased to help by giving constructive tips throughout (and I'll also try very hard to get the correct image, or say why particular images are correct as opposed to others). If you shoot me an email with a link to your project, I'm pretty good at guessing what the issues in raytracers are from looking at wrong images.

For hardware specifications, see the bottom of the page.

Timing information will look like "(#t #s ##:##:##)" and corresponds to the number of threads used, the number of samples (per pixel, per light, possibly explained in context), and the timing information rounded to the nearest second.

Project 14 - "Teapot Rendering Competition" (Round II)

The rendering competition is open to anyone who has taken the class in previous years. So I participated again.

It is worth noting that the raytracer I used is a nearly complete rewrite of the one used last year. Describing the changes is inconvenient (as I have forgotten them) and also well beyond the scope here. Suffice it to say, the rewritten version is much faster, and its capabilities are different from, but nearly a superset of, the old one's.

A few days before the competition, I was asked officially if I wanted to participate. I did, but there was a problem. I TAed the class this second year, so entering would mean the TA competing against her own students. Cem acknowledged that that would be unfair, but didn't think it was a problem. By contrast, if I refused the invitation, the reason would be that I think my raytracer would trounce every student's, which is more than a bit uppity.

I finally resolved the problem by determining that I would enter the competition, but if I won, I would give the prize to the first-place student (I talked it over with the other two second-round contenders, but I don't know what eventually happened).

A secondary (and perhaps more important, if you think about it) problem was that I didn't have any scene in mind.

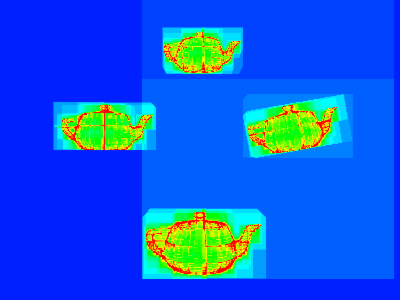

The first scene I came up with was making a teapot out of teapots. This necessitated implementing instancing. Here's one of the earliest working tests of instancing. The teapot is stored exactly once. Notice that transformations are supported. (Timing unavailable.)
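As a rough illustration of what instancing means here (this is a sketch, not the raytracer's actual code): the geometry is stored once, and each instance holds only a reference to it plus a transform. The names `Mesh` and `Instance`, and the restriction to a uniform scale followed by a translation, are assumptions made to keep the example short.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Mesh:
    """Geometry stored exactly once (hypothetical stand-in for the teapot)."""
    vertices: tuple

@dataclass(frozen=True)
class Instance:
    """A reference to shared geometry plus a transform (uniform scale, then translation)."""
    mesh: Mesh
    scale: float
    offset: tuple

    def to_world(self, p):
        # Object space -> world space.
        return tuple(self.scale * c + o for c, o in zip(p, self.offset))

    def to_object(self, p):
        # World space -> object space; a ray can be moved into object space
        # this way so that the shared mesh never has to be copied.
        return tuple((c - o) / self.scale for c, o in zip(p, self.offset))

teapot = Mesh(vertices=((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)))
instances = [Instance(teapot, 0.5, (float(i), 0.0, 0.0)) for i in range(3)]
```

All three instances point at the same `Mesh` object, so memory use grows with the number of transforms rather than the number of triangles.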

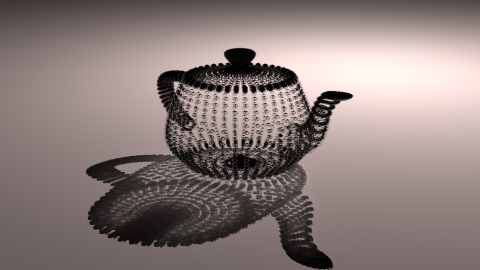

From here, it is pretty easy to make the teapot out of teapots. I wrote a six-line Python script to parse the OBJ file and output a "recursion point" at each vertex position. (Timing unavailable.) (Click for full res.)
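The original six-line script isn't reproduced here, but something along these lines would do the job. It reads the `v` lines of a Wavefront OBJ file and emits one point per vertex. The function name `recursion_points` and the `recurse x y z` output format are made up for this sketch; the real scene format is not shown on this page.

```python
def recursion_points(obj_text):
    """Return one (x, y, z) tuple per 'v' line of a Wavefront OBJ file."""
    points = []
    for line in obj_text.splitlines():
        parts = line.split()
        if parts and parts[0] == "v":  # skips "vn", "vt", "f", comments, ...
            points.append(tuple(float(c) for c in parts[1:4]))
    return points

sample = """# tiny stand-in for the teapot OBJ file
v 0.0 1.0 2.0
vn 0.0 0.0 1.0
v -1.5 0.25 3.0
f 1 2 2
"""

for x, y, z in recursion_points(sample):
    print(f"recurse {x} {y} {z}")  # hypothetical output format
```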

The problem is that a teapot made of teapots is really boring.

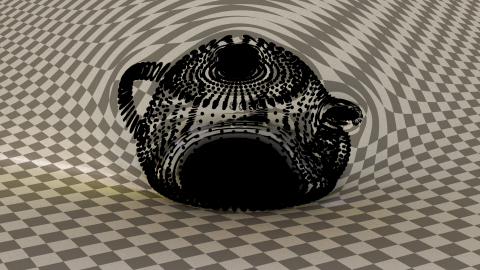

But then I got an idea. Remember the relativistic photon mapping one-off hack from project 13? In that scene, there is a single Schwarzschild black hole in the Cornell box. What if I made the entire teapot out of black holes!

You can actually calculate the orbit around a single source in closed form. If the black hole is not rotating (the case here), you can use that to find the curved path. Ultimately, I just used raymarching instead.

For the black hole teapot, there's more than one source, so closed-form evaluation becomes impossible and raymarching is required anyway. There are several thousand vertices in the teapot mesh, and ideally we shouldn't calculate the gravitational interaction with each one at each raymarch step. Instead, I came up with a "distortion volume". This is just a big, vector-valued, 3D table. You plunk it down in the scene and precompute the combined gravitational interaction at each grid cell. Then, when raymarching, you do an oct-linear lookup (that is, a trilinear blend of the eight surrounding cells). This (statistically consistent!) approximation works splendidly.
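A minimal sketch of such a table, under some loud assumptions: the field stored at each grid node is a plain inverse-square pull summed over all sources (a Newtonian stand-in, not the actual relativistic term), the grid is a cube with `n` nodes per axis, and the names `pull` and `DistortionVolume` are invented for this example.

```python
import math

def pull(p, sources, eps=1e-6):
    """Summed inverse-square pull at p from (position, mass) sources (assumed field)."""
    ax = ay = az = 0.0
    for (sx, sy, sz), m in sources:
        dx, dy, dz = sx - p[0], sy - p[1], sz - p[2]
        r2 = dx * dx + dy * dy + dz * dz + eps  # eps avoids division by zero
        k = m / (r2 * math.sqrt(r2))
        ax += k * dx
        ay += k * dy
        az += k * dz
    return (ax, ay, az)

class DistortionVolume:
    def __init__(self, lo, hi, n, sources):
        self.lo, self.n = lo, n
        self.step = tuple((h - l) / (n - 1) for l, h in zip(lo, hi))
        # The expensive per-source sum happens once per grid node, here.
        self.grid = [[[pull(self.node(i, j, k), sources) for k in range(n)]
                      for j in range(n)] for i in range(n)]

    def node(self, i, j, k):
        return tuple(l + s * idx for l, s, idx in zip(self.lo, self.step, (i, j, k)))

    def lookup(self, p):
        """Trilinear blend of the eight grid nodes surrounding p (clamped to the grid)."""
        idx, frac = [], []
        for c, l, s in zip(p, self.lo, self.step):
            t = min(max((c - l) / s, 0.0), self.n - 1.0)
            i = min(int(t), self.n - 2)
            idx.append(i)
            frac.append(t - i)
        out = [0.0, 0.0, 0.0]
        for di in (0, 1):
            for dj in (0, 1):
                for dk in (0, 1):
                    w = ((frac[0] if di else 1 - frac[0]) *
                         (frac[1] if dj else 1 - frac[1]) *
                         (frac[2] if dk else 1 - frac[2]))
                    v = self.grid[idx[0] + di][idx[1] + dj][idx[2] + dk]
                    for a in range(3):
                        out[a] += w * v[a]
        return tuple(out)

sources = [((0.4, 0.5, 0.6), 1.0), ((2.1, 0.5, 0.5), 0.5)]
vol = DistortionVolume((-1.0,) * 3, (3.0,) * 3, 9, sources)
print(vol.lookup((0.25, 0.0, 0.0)))
```

The cost per raymarch step is then eight table reads regardless of how many sources there are, at the price of interpolation error between nodes.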

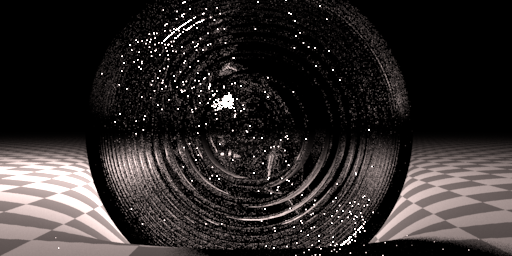

Here's an early test (no volume yet) of recreating the original work (timing unavailable):

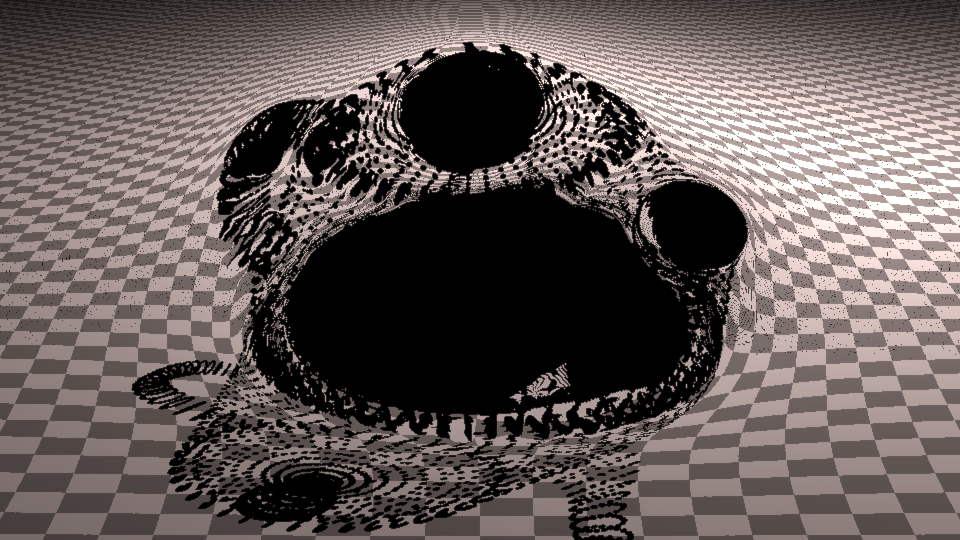

. . . and the teapot (timing unavailable):

Note: the shadow rays here ignore distortions; otherwise tracing them would be impossible.

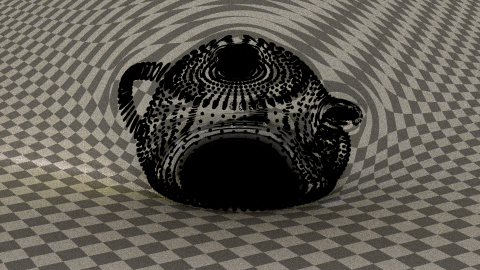

At this point, I discovered that the raymarching I was doing was incorrect. While the ray's position was being updated correctly, the direction was not. Rays would always point in the same direction, even if they orbited around the black hole! When I fixed this, I got the more realistic:

Here, I think I have a diffuse environment map.

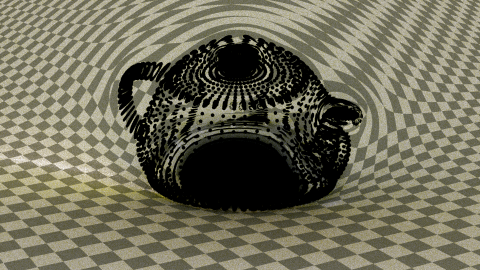
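The direction bug described above can be illustrated with a toy raymarcher. This is a guess at the shape of the bug, not the original code: the `update_direction` flag, the `march` and `point_mass` names, and the simple inverse-square bending term are all assumptions of this sketch.

```python
import math

def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def point_mass(mass):
    """Bending field of a single source at the origin (Newtonian stand-in)."""
    def accel(p):
        r2 = sum(c * c for c in p)
        k = -mass / (r2 * math.sqrt(r2))
        return tuple(k * c for c in p)
    return accel

def march(pos, direction, accel, steps, dt, update_direction=True):
    """Step a ray through a bending field; accel(pos) returns a 3-vector."""
    heading = direction
    for _ in range(steps):
        a = accel(pos)
        # Bend the heading, then keep it unit length.
        heading = normalize(tuple(h + dt * c for h, c in zip(heading, a)))
        pos = tuple(p + dt * h for p, h in zip(pos, heading))
        if update_direction:
            direction = heading  # the fix: the ray reports its bent heading
    return pos, direction

start, aim = (-5.0, 1.0, 0.0), (1.0, 0.0, 0.0)
field = point_mass(0.05)
fixed_pos, fixed_dir = march(start, aim, field, steps=1000, dt=0.01)
buggy_pos, buggy_dir = march(start, aim, field, steps=1000, dt=0.01,
                             update_direction=False)
print("fixed direction:", fixed_dir)
print("buggy direction:", buggy_dir)
```

Both runs end at the same position, but only the fixed one reports a direction that has bent toward the source, which matters for anything looked up by direction, such as an environment map.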

Using the forest environment map instead, I get (??t 81,^0s ??:??:??) (click for full res.):

The statistics look weird because the rendering was split between my new lab machine and my laptop, vertically down the middle, and then composed by hand. A 480x270, 1 s/p image took my laptop 68s. I assumed my new desktop, which has a similar processor, would render at about the same speed. So, I figured that using both at 81 s/p, a 1920x1080 image would render in 12.24hr. In reality, my laptop took 44383.519921s (~12.33hr), while the lab machine took just 17357.087029s (~4.82hr). So while I predicted the renders would be done at 12:14, the laptop finished at 12:27 and the lab machine finished at 04:56.
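The 12.24hr figure is consistent with scaling the test render linearly by pixel count and sample count, then halving it for two machines. The reconstruction below assumes that is how the estimate was made; the variable names are mine.

```python
test_seconds = 68.0                        # 480x270 at 1 s/p on the laptop
pixel_ratio = (1920 * 1080) / (480 * 270)  # 16x as many pixels
samples = 81
machines = 2                               # assumed equally fast

predicted_s = test_seconds * pixel_ratio * samples / machines
print(f"predicted: {predicted_s:.0f} s = {predicted_s / 3600:.2f} hr")

laptop_s, lab_s = 44383.519921, 17357.087029
speedup = laptop_s / lab_s
print(f"laptop: {laptop_s / 3600:.2f} hr, lab: {lab_s / 3600:.2f} hr")
print(f"lab machine speedup over laptop: {speedup:.2f}x")
```

By this arithmetic the laptop landed close to the prediction, while the lab machine ran roughly 2.6 times faster than assumed.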

I added some basic postprocessing. I did two "despeckle" operations, changed saturation+80 / light+18, and then did another despeckle. This produces the final image submitted to the competition (click for full res.):

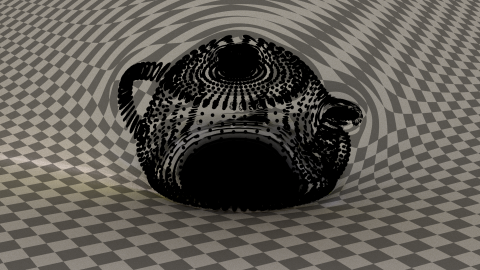

During the break, I set the lab machine to rendering a much higher quality image with 1225 s/p. This produces (timing unavailable) (click for full res.):

The major difficulty was in getting the transformations right, especially with the volume. Several times I thought I had gotten it right, only to realize I hadn't. The recursion for instancing was particularly annoying. The BVH needs to be multi-scale and handle transformations gracefully. For speed, in the rewrite, I had removed all transformations from triangles, instead evaluating them at load time. This produces much better BVHes, but it doesn't work once recursion is involved, so I had to undo it. Fortunately, the best of both worlds can be achieved. Identity transformations are optimized to no-ops, and triangle transformations are collapsed upward when possible.

A hilarious problem was also discovered. I noticed that scenes were using way too much memory. For example, the shattered teapot model from last year only just barely fit into 12 GB of RAM (it doesn't even have a million triangles, IIRC). I attributed this to the fact that I was storing, for each triangle, a vector of transform samples, each of which was three 3x4 matrices. But once I had undone this (as described above, so that each object, e.g. a whole triangle mesh, stores a transform stack instead), the problem was not greatly improved.

Things came to a head on the night before the competition, as I was furiously implementing the distortion code. Suddenly everything just started crashing. Finally, I was able to trace the problem. See, since the rewrite uses my improved math library, which now vectorizes everything, alignment becomes not just important to performance, but absolutely critical to correctness. So I was using my aligned allocator. However, my aligned allocator had two problems.

One was that it was allocating the size of a base type, not the child type. Since child types are usually larger than parent types, this is a segfault waiting to happen. However, a second error caused it to allocate a factor of sizeof(T) too much memory. This had the effect of massively increasing memory use, and also hiding the first error except in pathological cases.
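To see how the second error could mask the first, here is the byte arithmetic under one possible reading of the bug. The allocator itself is not shown on this page, so the exact form of the extra factor, the function names, and the type sizes below are all hypothetical.

```python
def needed_bytes(count, child_size):
    """What a correct allocator would request for `count` child objects."""
    return count * child_size

def buggy_bytes(count, base_size, oversize=True):
    # Error 1: sized for the base type instead of the child type.
    # Error 2 (assumed form): an extra factor of sizeof(T) on top of that.
    return count * base_size * (base_size if oversize else 1)

base_size, child_size, count = 16, 48, 1000  # hypothetical sizes in bytes

only_first = buggy_bytes(count, base_size, oversize=False)
both = buggy_bytes(count, base_size)
print("needed:          ", needed_bytes(count, child_size))
print("error 1 alone:   ", only_first)  # too small: writes run past the end
print("errors 1 and 2:  ", both)        # large enough, but very wasteful

huge_child = 512  # a "pathological" child, larger than base_size squared
print("still too small: ", both < needed_bytes(count, huge_child))
```

With these made-up sizes the combined bug allocates over five times what is needed, yet still comes up short once a child type is large enough.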


Hardware

Except as mentioned, renders are done on my laptop, which has:

- Intel i7-990X (12M Cache, [3.4{6|7},3.73 GHz], 6 cores, 12 threads)

- 12 GB RAM (DDR3 1333MHz Triple Channel)

- NVIDIA GeForce GTX 580M

- 750GB HDD (7200RPM, Serial-ATA II 300, 16MB Cache)

- Windows 7 x86-64 Professional (although all code compiles/runs on Linux)